Boosting(1) - AdaBoost

Contents

Adaptive Boosting 算法

Combine different classifiers & weights

Test data: $$T = \left\lbrace (x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \cdots, (x^{(N)}, y^{(N)})\right\rbrace, \quad x \in \chi \subseteq R^N, y \in \lbrace +1, -1 \rbrace \\ \text{with weights: } D_i = (w_{i1}, w_{i2}, \cdots, w_{iN})$$

Classifier: $$G_m(x): \chi \to \lbrace +1, -1\rbrace, \quad G(x) = \sum\limits^M_{i=1} \alpha_m G_m(x) \\ \text{with weights: } A = (\alpha_{1}, \alpha_{2}, \cdots, \alpha_{M}) $$

AdaBoost Algorithm

Input: Training data $T$, classifier $G_m(x)$ Output: Ensemble $G(x)$

- Initialize data weights: $$ D_1 = (w_{11}, w_{12}, \cdots, w_{1N}), \quad w_{1i} = \frac{1}{N} $$

- For $m = 1,2, \cdots, M$: (a) Train data with $D_m = (w_{m1}, w_{m2}, \cdots, w_{mN})$ weights on $T$, generate $G_m(x)$ (b) Calculate error rate of $G_m(x)$ on $T$: $$ e_m = P(G_m(x^{(i)}) \neq y^{(i)}) = \sum\limits^N_{i=1} w_{mi}I(G_m(x^{(i)}) \neq y^{(i)}) \tag{1}$$ (c) Calculate $G_m(x)$’s weight $\alpha_m$: $$ \alpha_m = \frac{1}{2} ln \frac{1-e_m}{e_m} \tag{2}$$ (d) Update $D_{m+1}$: $$ D_{m+1} = (w_{m+1, 1}, w_{m+1, 2}, \cdots, w_{m+1, N}) $$ $$w_{m+1, i} =\begin{cases} \frac{w_{mi}}{Z_m} e^{-\alpha_m}, & G_m(x^{(i)}) = y^{(i)} \\ \\ \frac{w_{mi}}{Z_m}e^{\alpha_m}, & G_m(x^{(i)}) \neq y^{(i)} \end{cases} = \frac {w_{mi}e^{-\alpha_m y^{(i)} G_m(x^{(i)})}} {Z_m} \tag{3}$$ $$Z_m = \sum\limits^N_{i=1}w_{mi}e^{-\alpha_m y^{(i)} G_m(x^{(i)})} $$ In which $Z_m$ is normalization factor, which makes $D_{m+1}$ a probability distribution, keeping $\sum\limits^N_{i=1}w_{m+1, i} = 1$

- Combine all the $G_m(x)$: $$ f(x) = \sum\limits^M_{i=1} \alpha_m G_m(x), \quad G(x) = sign(f(x))$$

Note on implementation: The weights on datasets could be implemented with sampling the original dataset with a probability distribution of $D_i$

Intuitive Explanation

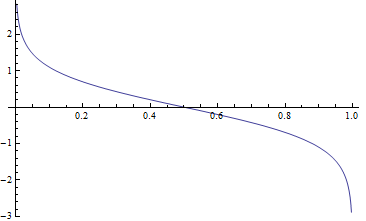

- Here is how $G_m(x)$’s coefficient, $ \alpha_m = \frac{1}{2} ln \frac{1-e_m}{e_m} $ varies through $e_m$, it’s easy to see that the more precise model will get a larger weight.

- Contrarily, from (3) it’s easy to tell that the samples get classified wrong will increase their weights, while the weights of the samples get classified correct will decrease, both in an exponential rate.