Andrew Ng ML Course Notes (12) - Advice for Applying Machine Learning


Advice for Applying Machine Learning

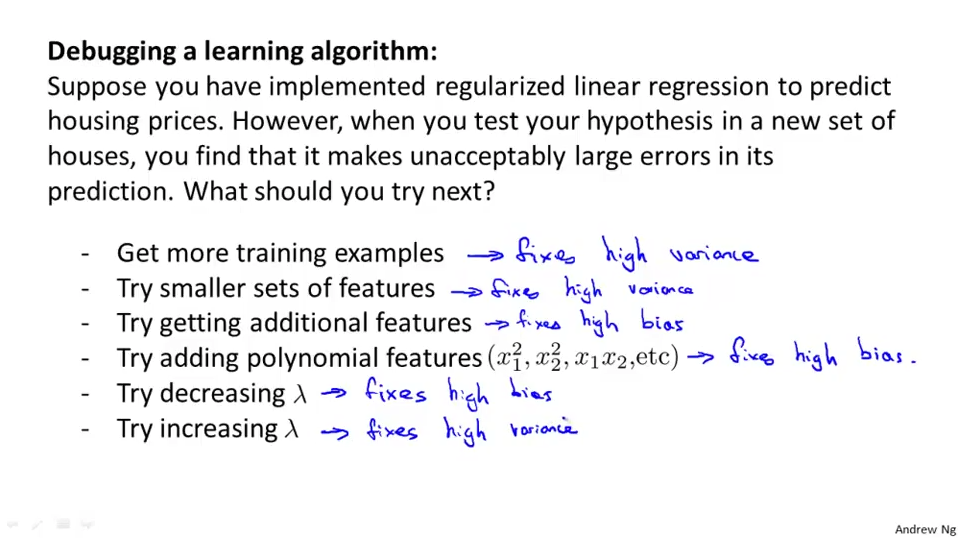

Debugging a learning algorithm

- Get more training data

- Try smaller sets of features

- Try getting additional features

- Try adding polynomial features ($x_1^2$, $x_2^2$, $x_1 x_2$, etc.)

- Try decreasing or increasing $\lambda$

Evaluating a hypothesis

- Training/testing procedure

  - Split the data into a training set and a test set (e.g. 70% / 30%, shuffling first if the data are ordered)

  - Learn the parameters $\theta$ from the training data

  - Compute the test error $J_{test}(\theta)$

- If $J_{train}(\theta)$ is low while $J_{test}(\theta)$ is high, the model is probably overfitting.

- For logistic regression

  - Misclassification (0/1) error: $$ err(h_\theta (x), y) = \begin{cases} 1, & \text{if } h_\theta(x) \ge 0.5 \text{ and } y = 0, \text{ or } h_\theta(x) < 0.5 \text{ and } y = 1 \\ 0, & \text{otherwise} \end{cases} $$ $$ TestError = \frac {1}{m_{test}} \sum\limits_{i=1}^{m_{test}} err\left(h_\theta (x_{test}^{(i)}), y_{test}^{(i)}\right) $$

  - It is an alternative to using the cost function $J_{test}(\theta)$ as the test metric
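A minimal sketch of the train/test procedure and the 0/1 test error in plain Python, assuming a single-feature logistic regression trained by batch gradient descent. The toy data, the 70/30 split, the learning rate `alpha`, the iteration count, and the helper names `split_train_test`, `fit_logistic` and `misclassification_error` are all illustrative assumptions (hand-written helpers, not a library API).

```python
import math
import random


def split_train_test(data, test_fraction=0.3, seed=0):
    """Shuffle a copy of the data, then split it into (training set, test set)."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_test = int(round(len(rows) * test_fraction))
    return rows[n_test:], rows[:n_test]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(train, alpha=0.5, iters=2000):
    """Batch gradient descent on the unregularized logistic cost (one feature plus intercept)."""
    theta0, theta1 = 0.0, 0.0
    m = len(train)
    for _ in range(iters):
        g0 = g1 = 0.0
        for x, y in train:
            diff = sigmoid(theta0 + theta1 * x) - y
            g0 += diff
            g1 += diff * x
        theta0 -= alpha * g0 / m
        theta1 -= alpha * g1 / m
    return theta0, theta1


def misclassification_error(theta, examples):
    """Fraction of examples where the prediction (1 if h >= 0.5, else 0) differs from y."""
    theta0, theta1 = theta
    wrong = 0
    for x, y in examples:
        prediction = 1 if sigmoid(theta0 + theta1 * x) >= 0.5 else 0
        wrong += prediction != y
    return wrong / len(examples)


# Hypothetical toy data: y = 1 for positive x, y = 0 for negative x.
data = [(x, 1 if x > 0 else 0) for x in (-5, -4, -3, -2, -1, 1, 2, 3, 4, 5)]
train, test = split_train_test(data)
theta = fit_logistic(train)
print("training error:", misclassification_error(theta, train))
print("test error:", misclassification_error(theta, test))
```

The parameters are learned only from `train`; `test` is touched once, at the end, to estimate the error on unseen data.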

Model Selection

- Let $d$ be the degree of the polynomial; it is an extra parameter to choose, in addition to $\theta$

- Naive approach: fit each candidate model, compute its cost function on the training set, and choose the $d$ with the lowest cost. Problem: the chosen model may fit the training set well but fail to generalize to new data.

- Solution: split the data into three parts: training set (60%), cross-validation set (20%), test set (20%)

- Use the cross-validation set to choose the model:

  - For each $d$, learn $\theta$ by minimizing the training error

  - Compute each model's cross-validation error and pick the $d$ with the lowest one

  - The test error of the chosen model shows how well it generalizes
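The three-way split and the model-selection loop can be sketched as follows, assuming noise-free toy data from a quadratic and plain least squares via the normal equations (no regularization). The helpers `solve`, `fit_poly`, `split_60_20_20` and `select_degree` are hypothetical names written for this sketch; the tiny Gaussian elimination is only meant for small, well-conditioned systems.

```python
import random


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w


def features(x, d):
    """Polynomial features [1, x, x^2, ..., x^d]."""
    return [x ** k for k in range(d + 1)]


def fit_poly(train, d):
    """Least-squares fit via the normal equations (X^T X) theta = X^T y."""
    n = d + 1
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for x, y in train:
        phi = features(x, d)
        for i in range(n):
            b[i] += phi[i] * y
            for j in range(n):
                A[i][j] += phi[i] * phi[j]
    return solve(A, b)


def cost(theta, data):
    """J(theta) = 1/(2m) * sum of squared errors (no regularization term)."""
    d = len(theta) - 1
    total = 0.0
    for x, y in data:
        h = sum(t * f for t, f in zip(theta, features(x, d)))
        total += (h - y) ** 2
    return total / (2 * len(data))


def split_60_20_20(data, seed=0):
    """Shuffle, then cut into training / cross-validation / test sets."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_train = len(rows) * 6 // 10
    n_cv = len(rows) * 2 // 10
    return rows[:n_train], rows[n_train:n_train + n_cv], rows[n_train + n_cv:]


def select_degree(data, degrees, seed=0):
    train, cv, test = split_60_20_20(data, seed)
    thetas = {d: fit_poly(train, d) for d in degrees}      # min training error
    cv_errors = {d: cost(thetas[d], cv) for d in degrees}  # validation error
    best_d = min(cv_errors, key=cv_errors.get)             # pick
    return best_d, cv_errors, cost(thetas[best_d], test)   # generalization estimate


# Hypothetical noise-free data from y = 1 + 2x - 3x^2 on [-1, 1].
xs = [-1 + 2 * i / 19 for i in range(20)]
data = [(x, 1 + 2 * x - 3 * x * x) for x in xs]
best_d, cv_errors, test_error = select_degree(data, [1, 2, 3, 4])
print(best_d, cv_errors, test_error)
```

With this noise-free data, every degree from 2 upward fits the cross-validation set almost exactly while $d = 1$ cannot; on noisy data, larger $d$ would typically start to overfit and raise the cross-validation error again.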

Bias vs. Variance

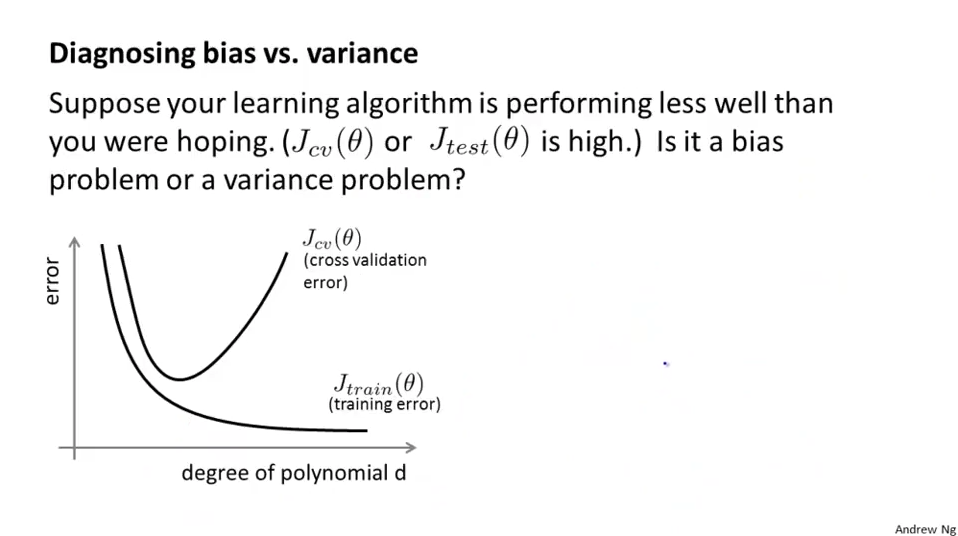

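- Diagnosing bias vs. variance

  - Plot the training error $J_{train}(\theta)$ and the cross-validation error $J_{cv}(\theta)$ against the degree of polynomial $d$

  - Left side (small $d$): high bias (underfitting); $J_{train}$ and $J_{cv}$ are both high, with $J_{cv} \approx J_{train}$

  - Right side (large $d$): high variance (overfitting); $J_{train}$ is low while $J_{cv} \gg J_{train}$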
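The two regimes can be written as a rough rule of thumb. The thresholds `acceptable_error` and `gap_factor` are hypothetical knobs the practitioner has to choose; the notes describe the regimes qualitatively and give no numbers.

```python
def diagnose(j_train, j_cv, acceptable_error, gap_factor=1.5):
    """Rough bias/variance diagnosis from training and cross-validation error.

    acceptable_error and gap_factor are hypothetical thresholds, not course values.
    """
    high_train = j_train > acceptable_error  # underfits even the training set
    big_gap = j_cv > gap_factor * j_train    # does much worse on unseen data
    if high_train and big_gap:
        return "high bias and high variance"
    if high_train:
        return "high bias"
    if big_gap:
        return "high variance"
    return "ok"


print(diagnose(0.50, 0.55, acceptable_error=0.1))  # like the left side of the plot
print(diagnose(0.01, 0.40, acceptable_error=0.1))  # like the right side of the plot
```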

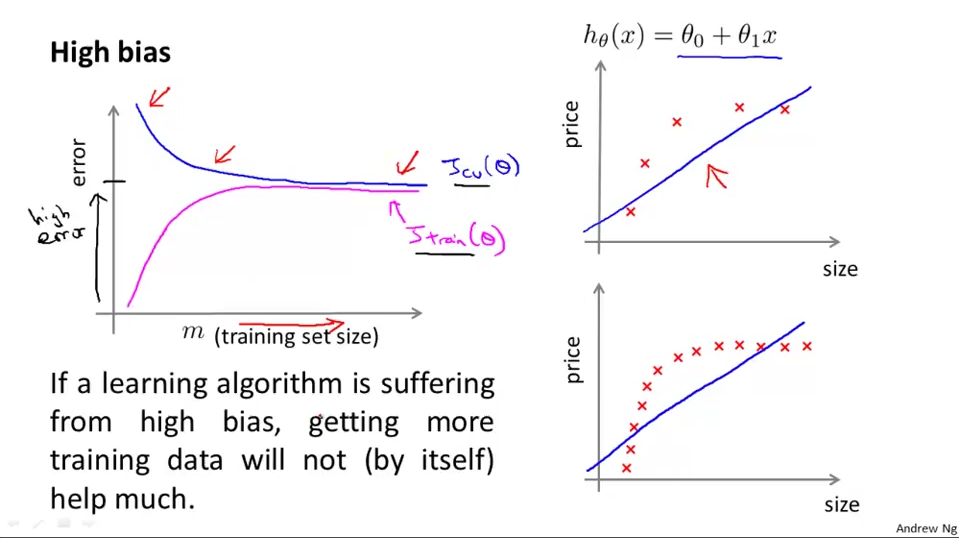

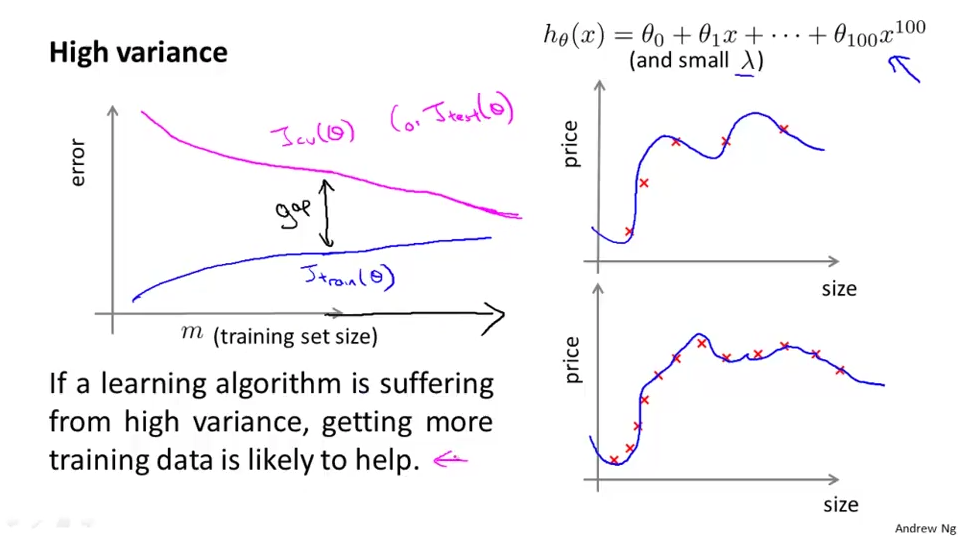

- Learning curves (error vs. training set size $m$) for different hypotheses

  - High bias: as $m$ grows, $J_{train}$ and $J_{cv}$ converge to a similar, high value, so more training data alone will not help much

  - High variance: a large gap remains between a low $J_{train}$ and a high $J_{cv}$, so more training data is likely to help
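A sketch of how a learning curve is computed: train on the first $m$ examples, then record $J_{train}$ on those $m$ examples and $J_{cv}$ on the whole cross-validation set. It assumes a one-feature linear regression fitted in closed form and deliberately applies it to made-up quadratic data, so the hypothesis has high bias; the helper names are illustrative.

```python
def fit_line(points):
    """Closed-form least-squares fit of h(x) = theta0 + theta1 * x."""
    m = len(points)
    x_mean = sum(x for x, _ in points) / m
    y_mean = sum(y for _, y in points) / m
    sxx = sum((x - x_mean) ** 2 for x, _ in points)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in points)
    theta1 = sxy / sxx if sxx > 0 else 0.0
    return y_mean - theta1 * x_mean, theta1


def cost(theta, points):
    """J(theta) = 1/(2m) * sum of squared errors."""
    theta0, theta1 = theta
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in points) / (2 * len(points))


def learning_curve(train, cv, start=2):
    """For m = start..len(train): fit on train[:m], return (m, J_train, J_cv)."""
    curve = []
    for m in range(start, len(train) + 1):
        subset = train[:m]
        theta = fit_line(subset)
        curve.append((m, cost(theta, subset), cost(theta, cv)))
    return curve


# Hypothetical quadratic data, fitted with a straight line (high bias).
train = [(x, x * x) for x in (0, 3, -3, 1, -1, 2, -2)]
cv = [(x, x * x) for x in (-2.5, -0.5, 0.5, 2.5)]
curve = learning_curve(train, cv)
for m, j_train, j_cv in curve:
    print(m, round(j_train, 3), round(j_cv, 3))
```

Here $J_{train}$ starts at 0 (a line always passes through two points) and reaches 6.0 at $m = 7$, while $J_{cv}$ ends at about 4.8: both errors stay large, as in the high-bias case.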

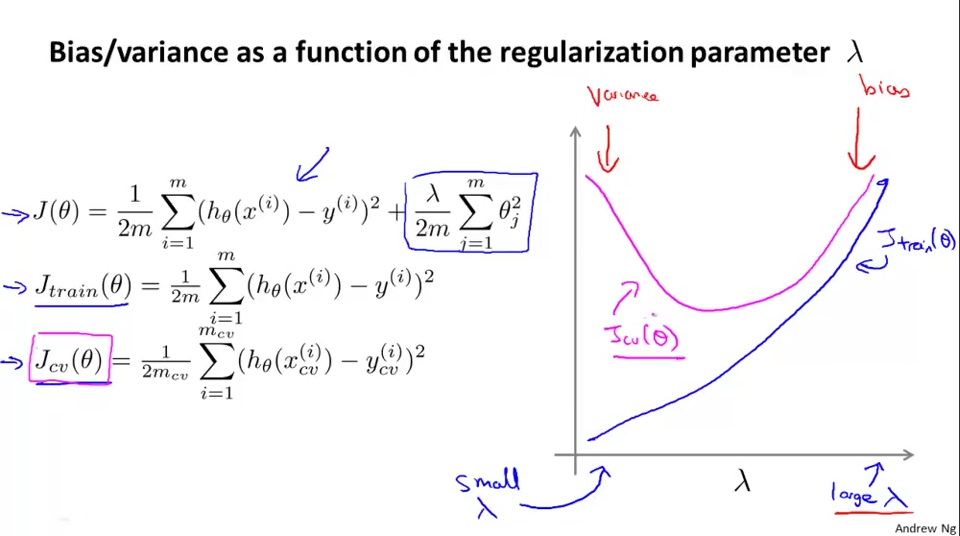

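- Learning curves for different values of the regularization parameter $\lambda$: a large $\lambda$ pushes the model toward high bias, a small $\lambda$ toward high variance

- Debugging a learning algorithm, revisited: getting more training examples, trying smaller sets of features, and increasing $\lambda$ address high variance; getting additional features, adding polynomial features, and decreasing $\lambda$ address high bias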
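Choosing $\lambda$ can be sketched the same way as choosing $d$: fit one model per candidate $\lambda$, then keep the $\lambda$ whose model has the lowest cross-validation error, measured without the regularization term. The sketch assumes a one-feature linear regression with an unpenalized intercept, which has the closed form $\theta_1 = \frac{S_{xy}}{S_{xx} + \lambda}$, $\theta_0 = \bar{y} - \theta_1 \bar{x}$. The data are made up, and the doubling grid 0, 0.01, 0.02, ..., 10.24 is one common choice, not a requirement.

```python
def fit_ridge_line(points, lam):
    """Minimize (1/2m)[sum (theta0 + theta1*x - y)^2 + lam * theta1^2].

    theta0 (the intercept) is not regularized. Requires Sxx + lam > 0.
    """
    m = len(points)
    x_mean = sum(x for x, _ in points) / m
    y_mean = sum(y for _, y in points) / m
    sxx = sum((x - x_mean) ** 2 for x, _ in points)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in points)
    theta1 = sxy / (sxx + lam)
    return y_mean - theta1 * x_mean, theta1


def squared_error(theta, points):
    """J(theta) without the regularization term, as used for J_train, J_cv, J_test."""
    theta0, theta1 = theta
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in points) / (2 * len(points))


def choose_lambda(train, cv, lambdas):
    """Fit one model per lambda and keep the one with the lowest J_cv."""
    results = []
    for lam in lambdas:
        theta = fit_ridge_line(train, lam)
        results.append((squared_error(theta, cv), lam, theta))
    best_error, best_lam, best_theta = min(results, key=lambda r: r[0])
    return best_lam, best_theta, results


lambdas = [0.0] + [0.01 * 2 ** k for k in range(11)]  # 0, 0.01, 0.02, ..., 10.24
train = [(0, 0.1), (1, 1.3), (2, 1.8), (3, 3.4)]      # made-up data
cv = [(0.5, 0.6), (1.5, 1.4), (2.5, 2.6)]
best_lam, best_theta, results = choose_lambda(train, cv, lambdas)
print(best_lam, best_theta)
```

Larger $\lambda$ shrinks $\theta_1$ toward 0 (more bias); $\lambda = 0$ gives ordinary least squares (more variance).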

Building a Spam Classifier

- Build a quick-and-dirty version first, then use a single numerical evaluation (such as the cross-validation error) to check whether a technique like stemming actually lowers the error
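A quick-and-dirty comparison of this kind might look like the sketch below. It assumes a deliberately crude suffix-stripping `crude_stem` (not the Porter stemmer or any library stemmer), a hypothetical toy keyword learner that flags words seen only in spam training emails, and a handful of made-up emails. The point is only that the decision rests on one number, the cross-validation error, computed with and without stemming.

```python
import re


def crude_stem(word):
    """Hypothetical, very crude stemmer: strip one common English suffix."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def tokens(text, stem):
    """Lower-case word set of an email, optionally stemmed."""
    words = re.findall(r"[a-z]+", text.lower())
    return {crude_stem(w) if stem else w for w in words}


def train_keywords(train, stem):
    """Toy learner: keep words that appear in spam training emails but never in ham."""
    spam_words, ham_words = set(), set()
    for text, y in train:
        (spam_words if y == 1 else ham_words).update(tokens(text, stem))
    return spam_words - ham_words


def cv_error(train, cv, stem):
    """Fraction of cross-validation emails misclassified by the toy keyword rule."""
    keywords = train_keywords(train, stem)
    wrong = 0
    for text, y in cv:
        predicted = 1 if tokens(text, stem) & keywords else 0
        wrong += predicted != y
    return wrong / len(cv)


# Made-up emails: (text, y) with y = 1 for spam.
train = [("Huge discount today", 1), ("Meeting today at noon", 0)]
cv = [("Discounts on watches", 1), ("Discounted offer", 1), ("Lunch meeting", 0)]
print("without stemming:", cv_error(train, cv, stem=False))
print("with stemming:   ", cv_error(train, cv, stem=True))
```

The toy emails were chosen so that stemming helps; on real data the comparison could go either way, which is why the number has to be measured.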

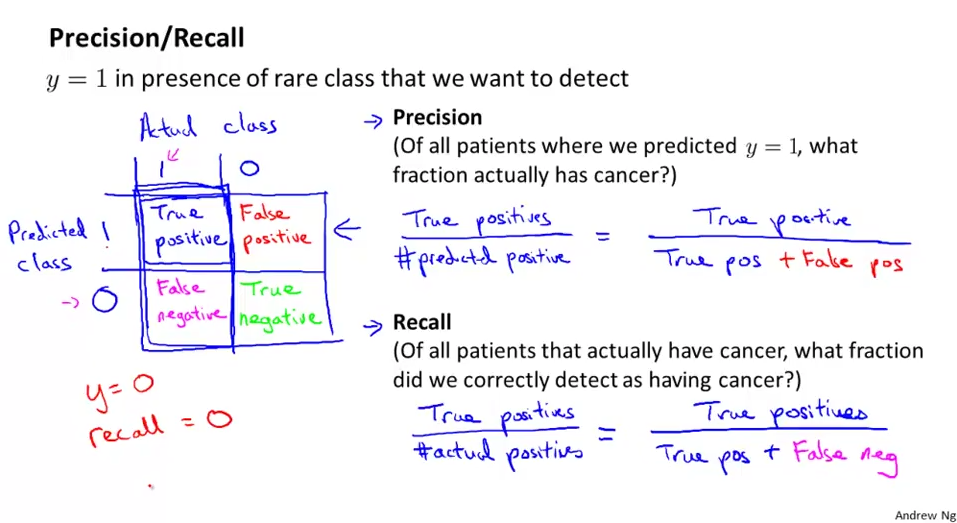

Handling Skewed Data

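- Skewed classes: one class is much rarer than the other (e.g. only a small fraction of examples have $y = 1$), so plain accuracy can be misleading

- Precision/recall (with $y = 1$ as the rare class): $Precision = \frac{TP}{TP + FP}$, $Recall = \frac{TP}{TP + FN}$

- High classifier threshold (predict $y = 1$ only when $h_\theta(x)$ is close to 1): high precision, low recall; predictions are confident, but many positives are overlooked

- Low classifier threshold: high recall, low precision; fewer positives are overlooked, but more predictions are false alarms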
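Precision and recall at a given threshold can be computed as below. The scores and labels are made-up values, and returning 0.0 when a ratio is undefined (e.g. nothing predicted positive) is a convention assumed here.

```python
def precision_recall(scores, labels, threshold):
    """Predict y = 1 when h_theta(x) >= threshold; return (precision, recall)."""
    tp = fp = fn = 0
    for h, y in zip(scores, labels):
        predicted = 1 if h >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1  # true positive
        elif predicted == 1 and y == 0:
            fp += 1  # false positive (false alarm)
        elif predicted == 0 and y == 1:
            fn += 1  # false negative (overlooked positive)
    # Convention assumed here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up classifier outputs h_theta(x) and true labels y.
scores = [0.95, 0.85, 0.75, 0.6, 0.55, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
for threshold in (0.9, 0.5):
    print(threshold, precision_recall(scores, labels, threshold))
```

On this toy data, raising the threshold from 0.5 to 0.9 moves precision from 0.6 to 1.0 and recall from 0.75 to 0.25.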

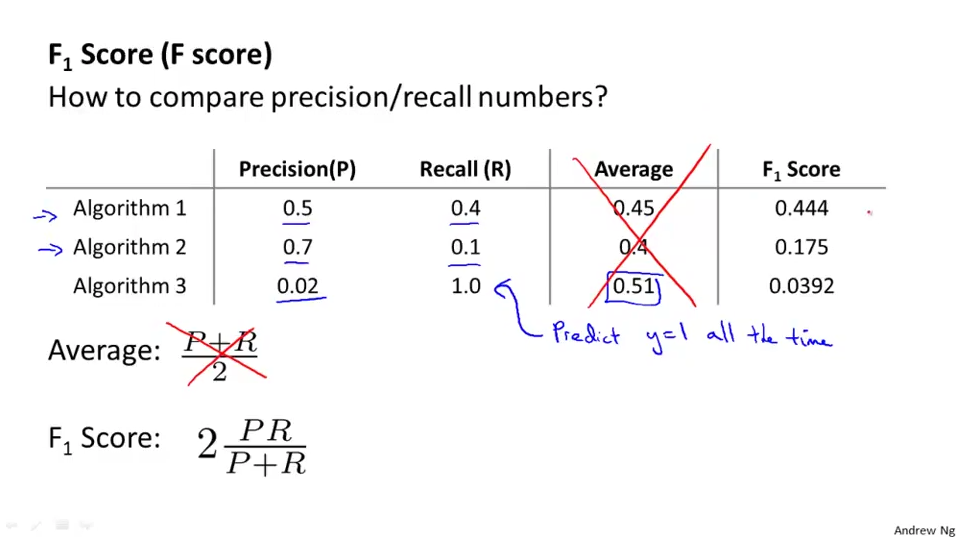

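- Choosing the threshold automatically

- To compare algorithms (or thresholds) by precision and recall, the $F_1$ score $F_1 = 2\frac{PR}{P + R}$ is better than the average $\frac{P + R}{2}$, because it is high only when both $P$ and $R$ are high; pick the one with the highest $F_1$ on the cross-validation set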
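A sketch of comparing by $F_1$ instead of the average, and of picking the threshold that maximizes $F_1$ on a cross-validation set. The (precision, recall) pairs for the hypothetical algorithms A, B and C, the scores and the labels are illustrative values; `choose_threshold` is a hypothetical helper.

```python
def f1_score(precision, recall):
    """F1 = 2PR / (P + R); defined here as 0 when P + R = 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall(scores, labels, threshold):
    """Predict y = 1 when h >= threshold; return (precision, recall), 0.0 if undefined."""
    tp = sum(1 for h, y in zip(scores, labels) if h >= threshold and y == 1)
    fp = sum(1 for h, y in zip(scores, labels) if h >= threshold and y == 0)
    fn = sum(1 for h, y in zip(scores, labels) if h < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def choose_threshold(scores, labels, thresholds):
    """Return the threshold with the highest F1 on the given (cross-validation) data."""
    return max(thresholds, key=lambda t: f1_score(*precision_recall(scores, labels, t)))


algorithms = {"A": (0.5, 0.4), "B": (0.7, 0.1), "C": (0.02, 1.0)}
best_by_average = max(algorithms, key=lambda k: sum(algorithms[k]) / 2)
best_by_f1 = max(algorithms, key=lambda k: f1_score(*algorithms[k]))
print(best_by_average, best_by_f1)

cv_scores = [0.95, 0.85, 0.75, 0.6, 0.55, 0.4, 0.3, 0.2, 0.1, 0.05]
cv_labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
print(choose_threshold(cv_scores, cv_labels, [0.1, 0.3, 0.5, 0.7, 0.9]))
```

On these numbers the plain average prefers C, even though recall 1.0 with precision 0.02 is what a classifier that always predicts $y = 1$ would get if 2% of examples were positive; $F_1$ prefers A.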

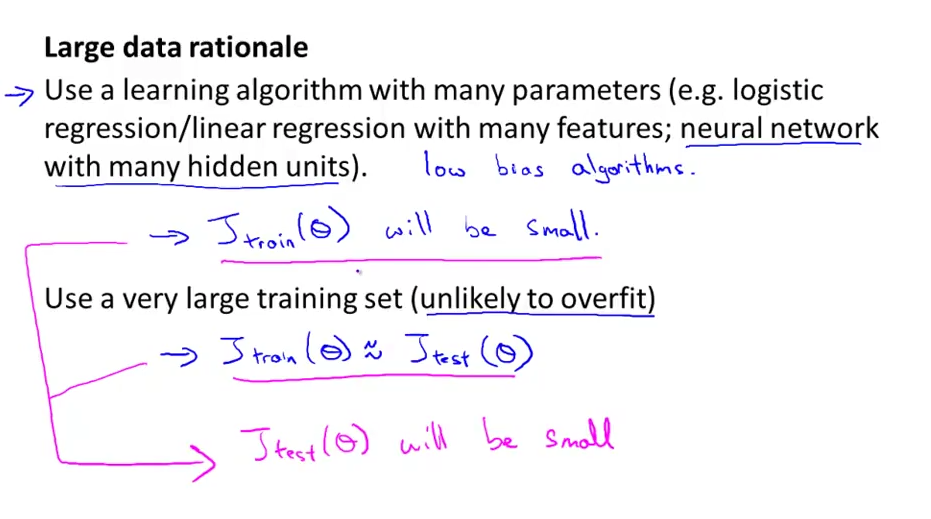

Using Large Data Sets

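- More training data generally improves performance, provided the features $x$ contain enough information to predict $y$ accurately (a useful check: could a human expert confidently predict $y$ from $x$?)

- With a low-bias algorithm (many parameters, so $J_{train}(\theta)$ is small) and a very large training set (so overfitting is unlikely and $J_{train}(\theta) \approx J_{test}(\theta)$), the test error $J_{test}(\theta)$ will be small as well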